Setting up a database

Introduction

In this assignment, you'll perform few basic tasks needed to create a database. You'll design the tables create it, add indexes to them and of course connect everything with appropriate relations. You also learn how to use LOAD DB2 Utility. The database we'll create will be used for storing RMF performance data. It will consist of three tables: - LPARS – Each record stores some basic information about a single LPAR. - PERF_RMF_INT – Each record represents RMF performance data from a single interval. It depends on RMF setting, usually, RMF saves the data each 10, 15 or 30 minutes. - PERF_DAY_AVG – Each record stores daily average values of selected columns from PERF_RMF_INT table. Before starting this Assignment contact your database administrator or RACF support (depending on how the DB2 is protected) in order to get appropriate authorization for DB2 operations.

Tasks

![]() 1. Create a database and a single tablespace in it.

1. Create a database and a single tablespace in it.

![]() 2. Design three tables described in the introduction: LPARS, PERF_RMF_INT and PERF_DAY_AVG.

- Hint 2 contains JCL code for a sample RMF job that extracts fields that will be stored in PERF_RMF_INT table. You can model your tables on it or modify the job and then design the table accordingly. It is recommended you use the same job since solutions of many later Tasks will depend on the data gathered by RMF.

- There are many free tools in which you can create rational database diagrams and then generate SQL for the database creation. You'll save a lot of time this way.

2. Design three tables described in the introduction: LPARS, PERF_RMF_INT and PERF_DAY_AVG.

- Hint 2 contains JCL code for a sample RMF job that extracts fields that will be stored in PERF_RMF_INT table. You can model your tables on it or modify the job and then design the table accordingly. It is recommended you use the same job since solutions of many later Tasks will depend on the data gathered by RMF.

- There are many free tools in which you can create rational database diagrams and then generate SQL for the database creation. You'll save a lot of time this way.

![]() 3. Create tables designed in Task#2 and join them with appropriate relations.

3. Create tables designed in Task#2 and join them with appropriate relations.

![]() 4. Manually insert two records into LPAR table.

4. Manually insert two records into LPAR table.

![]() 5. Run RMF job that creates Overview report from the selected system resources.

- Sample JCL code is in Hint 2.

- Make sure that RMF control statements match columns in your database.

5. Run RMF job that creates Overview report from the selected system resources.

- Sample JCL code is in Hint 2.

- Make sure that RMF control statements match columns in your database.

![]() 6. Reformat RMF report with DFSORT so it can be processed by DB2 LOAD Utility.

6. Reformat RMF report with DFSORT so it can be processed by DB2 LOAD Utility.

![]() 7. Use DB2 LOAD Utility to load RMF data into PERF_RMF_INT table.

7. Use DB2 LOAD Utility to load RMF data into PERF_RMF_INT table.

Hint 1

DB2 documentation is available under the following address: http://www-01.ibm.com/support/docview.wss?uid=swg27039165 For this Task, “DB2 11 for z/OS: SQL Reference” and “DB2 11 for z/OS: Introduction to DB2 for z/OS” are two documents that will be helpful. Be sure to check “Appendix: DB2 catalog tables” in “DB2 11 for z/OS: SQL Reference”. It contains description of build-in DB2 tables which you'll have to get familiar with. When it comes to running SQL queries two tools are usually used: - SPUFI – This is a build-in function of DB2I (DB2 Interactive), the default interface for running SQL queries. - DB2 QMF – Query Management Facility is another popular tool. This assignment does not cover those tools but both of them are easy to figure out.

Hint 2

JCL Code for RMF report:

//SMFSORT EXEC PGM=SORT,REGION=0M //SORTIN DD DISP=SHR,DSN=&SYSUID..SMF.DUMP1 <-- SMF DATA // DD DISP=SHR,DSN=&SYSUID..SMF.DUMP2 //SORTOUT DD DISP=(,PASS),SPACE=(CYL,(200,200),RLSE),DSN=&PLXG //SORTWK01 DD DISP=(NEW,DELETE),UNIT=3390,SPACE=(CYL,(200)) //SORTWK02 DD DISP=(NEW,DELETE),UNIT=3390,SPACE=(CYL,(200)) //SORTWK03 DD DISP=(NEW,DELETE),UNIT=3390,SPACE=(CYL,(200)) //SORTWK04 DD DISP=(NEW,DELETE),UNIT=3390,SPACE=(CYL,(200)) //SORTWK05 DD DISP=(NEW,DELETE),UNIT=3390,SPACE=(CYL,(200)) //SORTWK06 DD DISP=(NEW,DELETE),UNIT=3390,SPACE=(CYL,(200)) //SORTWK07 DD DISP=(NEW,DELETE),UNIT=3390,SPACE=(CYL,(200)) //SORTWK08 DD DISP=(NEW,DELETE),UNIT=3390,SPACE=(CYL,(200)) //SYSPRINT DD SYSOUT=* //SYSOUT DD SYSOUT=* //SYSIN DD * SORT FIELDS=(11,4,CH,A,7,4,CH,A),EQUALS MODS E15=(ERBPPE15,36000,,N),E35=(ERBPPE35,3000,,N) //RMFPP EXEC PGM=ERBRMFPP,REGION=512M //MFPINPUT DD DSN=&PLXG,DISP=(OLD,PASS) //MFPMSGDS DD SYSOUT=* //PPOVWREC DD DISP=(,CATLG),DSN=&SYSUID..RMF.FULL, <-- OUTPUT // SPACE=(CYL,(50,50),RLSE),BLKSIZE=0,UNIT=SYSDA //PPORP001 DD SYSOUT=* //SYSPRINT DD SYSOUT=* //SYSOUT DD SYSOUT=* //SYSIN DD * <-- SUPPLY LPAR NAME DATE(07312017,08062017) OVERVIEW(RECORD,REPORT) NOSUMMARY /* CPU */ OVW(CPUBSY(CPUBSY)) /* PHYSICAL CP BUSY */ OVW(IIPBSY(IIPBSY)) /* PHYSICAL ZIIP BUSY */ OVW(MVSBSY(MVSBSY)) /* MVS CP BUSY */ OVW(IIPMBSY(IIPMBSY)) /* MVS ZIIP BUSY */ OVW(AVGWUCP(AVGWUCP)) /* INREADY QUEUE FOR CP */ OVW(AVGWUIIP(AVGWUIIP)) /* INREADY QUEUE FOR ZIIP */ OVW(NLDEFL(NLDEFL(PZ13))) /* DEF PROCESSORS IN PARTITION */ OVW(NLDEFLCP(NLDEFLCP(PZ13))) /* DEF CP IN PARTITION */ OVW(NLDEFLIP(NLDEFLIP(PZ13))) /* DEF ZIIP IN PARTITION */ OVW(NLACTL(NLACTL(PZ13))) /* ACTUAL PROCESSORS IN PARTITION */ OVW(NLACTLCP(NLACTLCP(PZ13))) /* ACTUAL CP IN PARTITION */ OVW(NLACTLIP(NLACTLIP(PZ13))) /* ACTUAL ZIIP IN PARTITION */ /* MSU */ OVW(WDEFL(WDEFL(PZ13))) /* DEF WEIGHTS FOR CP */ OVW(WDEFLIIP(WDEFLIIP(PZ13))) /* DEF WEIGHTS FOR ZIIP */ OVW(WACTL(WACTL(PZ13))) /* ACTUAL WEIGHTS FOR CP */ OVW(LDEFMSU(LDEFMSU(PZ13))) /* DEFINED MSU */ OVW(LACTMSU(LACTMSU(PZ13))) /* USED MSU */ OVW(WCAPPER(WCAPPER(PZ13))) /* WLM CAPPING */ OVW(INICAP(INICAP(PZ13))) /* INITIAL CAPPING FOR THE CP */ OVW(LIMCPU(LIMCPU(PZ13))) /* PHYSICAL HW CAPACITY LIMIT */ /* STORAGE */ OVW(STOTOT(STOTOT(POLICY))) /* USED CENTRAL STORAGE */ OVW(STORAGE(STORAGE)) /* AVAILABLE CENTRAL STORAGE */ OVW(TPAGRT(TPAGRT)) /* PAGE-INS & PAGE-OUTS PER SEC */ OVW(PAGERT(PAGERT)) /* PAGE FAULTS */ OVW(PGMVRT(PGMVRT)) /* PAGE MOVE RATE */ OVW(CSTORAVA(CSTORAVA)) /* AVG NUMBER OF ALL FRAMES */ OVW(AVGSQA(AVGSQA)) /* AVG NUMBER OF SQA FIXED FRAMES */ OVW(AVGCSAF(AVGCSAF)) /* AVG NUMBER OF CSA FIXED FRAMES */ OVW(AVGCSAT(AVGCSAT)) /* AVG NUMBER OF ALL CSA FRAMES */ /* I/O */ OVW(IOPIPB(IOPIPB)) /* I/O PROCESOR BUSY PERCENT */ OVW(IORPII(IORPII)) /* RATE OF PROCESSED I/O INTERRUPTS */ OVW(IOPALB(IOPALB)) /* PERCENT OF I/O RETRIES */

RMF overview reports saves extracted data in form of columns and rows so just like a table in relational database. Thanks to that you can easily map OVW statements into table definition. The easiest and fastest way to design table diagram is to use one of the freeware database designers. Each of them provides graphical interface through which you can create your tables and relationships between them. Make sure that the tool you're using supports DB2 SQL version.

Hint 3

Each Database Design Tool can generate SQL code from the diagram you've created. Basically there are four things to do: - Tables creation. - Indexes creation. - Primary keys definition. - Foreign keys definition.

Hint 6

You'll have to omit some records and also at least one column. You'll also have to reformat Date format in RMF report so it's in format readable by DB2 LOAD Utility. See OMIT & OUTREC control statements of DFSORT Utility. It's documented in “z/OS DFSORT Application Programming Guide”.

Hint 7

In “DB2 11 for z/OS: Introduction to DB2 for z/OS” there is "Chapter 10. DB2 basics tutorial" where LOAD Utility is briefly described. For more details be sure to check "Db2 11 for z/OS: Utility Guide and Reference".

Solution 1

The first thing to do is to ensure you have an appropriate authorization to the DB2 subsystem. DB2 can be protected in two ways, via DB2 Authorization Table or RACF profiles. Depending on that it may be controlled either by DBDC or RACF team, you may need to contact them to be able to finish this Assignment. Creation of database is very simple. This is only it's definition so we don't have to worry about details. CREATE DATABASE JANSDB; You can display all databases defined in particular DB2 subsystem with SQL command: SELECT * FROM SYSIBM.SYSDATABASE; Table space is a physical file (VSAM LDS) in which your tables and the data in them will be stored. You can create it as follows:

CREATE TABLESPACE JANSTS1 IN JANSDB USING STOGROUP SYSDEFLT PRIQTY 3000 SECQTY 3000 ERASE YES CCSID ASCII LOCKSIZE ROW;

STOGROUP has nothing to do with SMS Storage group. This is simply a list of volumes which DB2 will use for allocating your table space. You can display available storage groups with: SELECT * FROM SYSIBM.SYSSTOGROUP; To display what volume will be chosen by selecting SYSDEFLT group you can issue: SELECT * FROM SYSIBM.SYSVOLUMES WHERE SGNAME='SYSDEFLT'; If you have access do “DB2 Admin” you can also view Catalog Tables via option 1 (DB2 system catalog). PRIQTY and SECQTY define how large your table space can be. They are specified in Pages, a Page can have from 4kB up to 32kB, 4kB is the default.

Solution 2

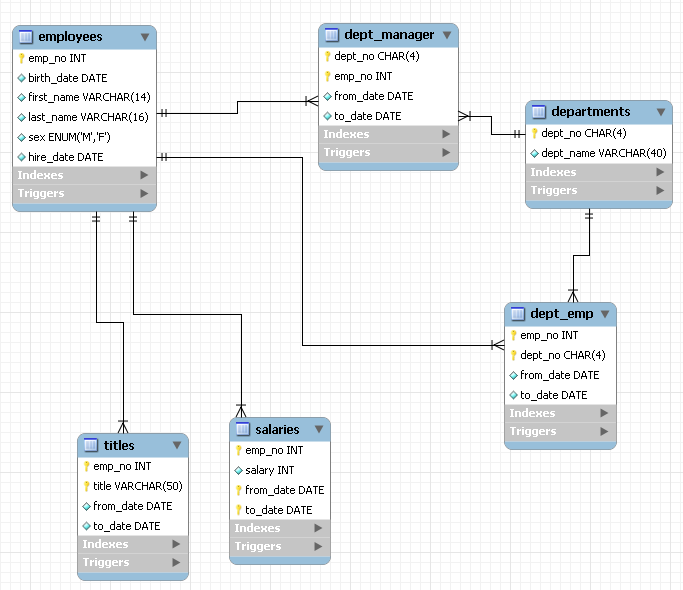

Three tables have to be created: - LPAR - PERF_RMF_INT - PERF_DAY_AVG First one is for general information about LPAR and the other two are for storing data extracted by the job included in Hint 2. First let's check what built-in data types are available in DB2: Numeric: - SMALLINT - -32768 to +32767 - INTEGER - -2147483648 to +2147483647 - BIGINT - -9223372036854775808 to +9223372036854775807 - REAL - 32 bit floating point - DOUBLE - (or FLOAT) 64 bit floating point - DECIMAL - (or NUMERIC) decimal floating point - max 31 digits - DECFLOAT - decimal floating point - max 34 digits String: - CHAR(n) - fixed length string (max 255 characters) - VARCHAR(n) - variable length string (max 32704 characters) - CLOB(n) - variable length string (max 2147483647 characters) - GRAPHIC(n) - fixed length double-byte string (max 127 characters) - VARGRAPHIC(n) - variable length double-byte string (max 16352 characters) - DBCLOB(n) - variable length double-byte string (max 1073741823 characters) - BINARY(n) - fixed length binary string (max 127 characters) - VARBINARY(n) - variable length binary string (max 32704 characters) - BLOB(n) - variable length binary string (max 2147483647 characters) Date and time - DATE - Date - TIME - Time - TIMESTAMP WITHOUT TIME ZONE - Date & Time & Fractional (GMT time zone) - TIMESTAMP WITH TIME ZONE - Date & Time & Fractional (Local time zone) OTHER: - ROWID - ID of a record - XML - Formatted XML string (max 2147483647 characters) In “Data Types” chapter of “DB2 11 for z/OS: SQL Reference” there is a table that nicely shows all built-in data types. Here are few remarks regarding types: 1. User created types are also supported, they're called Distinct types. 2. The difference between three types of strings: - Normal character strings - For storing data in ASCII or EBCDIC format. The format can be defined in data base, table space or table definition. - Double-byte character strings - For storing data in UTF-16 format. - Binary character strings - For storing binary data, for example sounds or pictures. 3. Binary vs Decimal representation of numbers: - Floating point numbers are not precise. This is the main reason why Decimal are recommended. - When moving data to system other than mainframe floating point numbers may be coded differently. In such case, conversion is needed. - Mainframes processors have special hardware circuits for processing Decimal numbers while other processors must first convert them to a binary form. This means that if you need to process a lot of data on systems other than mainframe floating point calculations are faster. - Floating-point numbers take less storage than decimal. - DECFLOAT is simply a DECIMAL representation with more precision. It is a relatively new type, added in DB2 9, because of that it's best to stick with DECIMAL for compatibility reasons. Database diagram (made with use of dbdiffo.com online editor):

As you can see PERF_RMF_INT table will store all the information extracted by RMF job. PERF_DAY_AVG stores only selected columns. The idea is simple, PERF_RMF_INT will store all RMF intervals for like a month of two. This way a System Programmer will be able to easily analyze the detailed performance data from the last few weeks/months. RMF itself retains such data only for a few days. PERF_DAY_AVG is a table in which one row represents a single day, this data will be stored for a longer period, for example 5 years. It's standard practice to store some SMF data for many years, for example MIPS & MSU usage, SMF record 89 etc. Such data may be needed for pricing software or long term performance analysis. There are three Foreign Key constraints between those tables: - DAY_AVG_FK_LPAR – 1 to 0-n – one LPAR can have zero or more PERF_DAY_AVG records and each PERF_DAY_AVG record must be connected to a single LPAR record. - RMF_INT_FK_LPAR – 1 to 0-n – one LPAR can have zero or more PERF_RMF_INT records and each PERF_RMF_INT record must be connected to a single LPAR record. - RMF_INT_FK_DAY_AVG - 0,1 to 0-n – one PERF_DAY_AVG record may have zero or more PERF_RMF_INT records and one PERF_RMF_INT record may or may not be connected to a single RMF_DAY_AVG record.

Solution 3

SQL code for LPAR table:

CREATE TABLE LPAR ( LPAR_ID SMALLINT NOT NULL GENERATED BY DEFAULT AS IDENTITY (START WITH 1, INCREMENT BY 1), LPAR_NAME VARCHAR(4) NOT NULL, CPU_MODEL VARCHAR(10) NOT NULL, Z_OS_VERSION VARCHAR(6) NOT NULL, MAINTANANCE_LEVEL DATE NOT NULL, KEEP_RMFINT_FOR_X_DAYS SMALLINT NOT NULL DEFAULT 40, KEEP_DAYAVG_FOR_X_DAYS SMALLINT NOT NULL DEFAULT 2000, COMPANY_NAME VARCHAR(50), COMMENTS VARCHAR(500) ) IN JANSDB.JANSTS1; CREATE UNIQUE INDEX IX1_LPAR ON LPAR (LPAR_ID ASC) USING STOGROUP SYSDEFLT PRIQTY 64 SECQTY 64 ERASE YES BUFFERPOOL BP2 CLOSE YES; ALTER TABLE LPAR ADD CONSTRAINT LPAR_ID PRIMARY KEY (LPAR_ID);

SQL code was generated automatically by “dbdiffo.com” website, there are two modifications: - IN JANSDB.JANSTS1 – remember to add it in CREATE TABLE statement, otherwise DB2 will create the table in new automatically generated database. - CREATE UNIQUE INDEX – creating index is optional and not really needed in this assignment but all production databases use Indexes so it's good habit to always create it, just to get used to it. SQL code for PERF_DAY_AVG table:

CREATE TABLE PERF_DAY_AVG ( PERF_DAY_AVG_ID INTEGER NOT NULL GENERATED BY DEFAULT AS IDENTITY (START WITH 1, INCREMENT BY 1), LPAR_ID SMALLINT NOT NULL, DATE DATE NOT NULL, CPUBSY DECIMAL(6,3) NOT NULL DEFAULT 0, IIPBSY DECIMAL(6,3) NOT NULL DEFAULT 0, MVSBSY DECIMAL(6,3) NOT NULL DEFAULT 0, IIPMBSY DECIMAL(6,3) NOT NULL DEFAULT 0, AVGWUCP DECIMAL(6,3) NOT NULL DEFAULT 0, AVGWUIIP DECIMAL(6,3) NOT NULL DEFAULT 0, WDEFL DECIMAL(6,0) NOT NULL DEFAULT 0, WDEFLIIP DECIMAL(6,0) NOT NULL DEFAULT 0, WACTL DECIMAL(6,0) NOT NULL DEFAULT 0, LDEFMSU DECIMAL(6,0) NOT NULL DEFAULT 0, LACTMSU DECIMAL(6,0) NOT NULL DEFAULT 0, STOTOT DECIMAL(15,0) NOT NULL DEFAULT 0, TPAGRT DECIMAL(15,3) NOT NULL DEFAULT 0, IORPII DECIMAL(15,3) NOT NULL DEFAULT 0, IOPALB DECIMAL(15,3) NOT NULL DEFAULT 0 ) IN JANSDB.JANSTS1; CREATE UNIQUE INDEX IX1_PERF_DAY_AVG ON PERF_DAY_AVG (PERF_DAY_AVG_ID ASC) USING STOGROUP SYSDEFLT PRIQTY 64 SECQTY 64 ERASE YES BUFFERPOOL BP2 CLOSE YES; ALTER TABLE PERF_DAY_AVG ADD CONSTRAINT PERF_DAY_AVG_ID PRIMARY KEY (PERF_DAY_AVG_ID);

SQL code for PERF_RMF_INT table:

CREATE TABLE PERF_RMF_INT ( PERF_RMF_INT_ID INTEGER NOT NULL GENERATED BY DEFAULT AS IDENTITY (START WITH 1, INCREMENT BY 1), LPAR_ID SMALLINT NOT NULL, PERF_DAY_AVG_ID INTEGER, DATE DATE NOT NULL, TIME TIME NOT NULL, CPUBSY DECIMAL(6,3) NOT NULL DEFAULT 0, IIPBSY DECIMAL(6,3) NOT NULL DEFAULT 0, MVSBSY DECIMAL(6,3) NOT NULL DEFAULT 0, IIPMBSY DECIMAL(6,3) NOT NULL DEFAULT 0, AVGWUCP DECIMAL(6,3) NOT NULL DEFAULT 0, AVGWUIIP DECIMAL(6,3) NOT NULL DEFAULT 0, NLDEFL DECIMAL(3,0) NOT NULL DEFAULT 0, NLDEFLCP DECIMAL(3,0) NOT NULL DEFAULT 0, NLDEFLIP DECIMAL(3,0) NOT NULL DEFAULT 0, NLACTL DECIMAL(3,0) NOT NULL DEFAULT 0, NLACTLCP DECIMAL(3,0) NOT NULL DEFAULT 0, NLACTLIP DECIMAL(3,0) NOT NULL DEFAULT 0, WDEFL DECIMAL(6,0) NOT NULL DEFAULT 0, WDEFLIIP DECIMAL(6,0) NOT NULL DEFAULT 0, WACTL DECIMAL(6,0) NOT NULL DEFAULT 0, LDEFMSU DECIMAL(6,0) NOT NULL DEFAULT 0, LACTMSU DECIMAL(6,0) NOT NULL DEFAULT 0, WCAPPER DECIMAL(6,0) NOT NULL DEFAULT 0, INICAP DECIMAL(6,0) NOT NULL DEFAULT 0, LIMCPU DECIMAL(6,0) NOT NULL DEFAULT 0, STOTOT DECIMAL(15,0) NOT NULL DEFAULT 0, STORAGE DECIMAL(15,0) NOT NULL DEFAULT 0, TPAGRT DECIMAL(15,3) NOT NULL DEFAULT 0, PAGERT DECIMAL(15,3) NOT NULL DEFAULT 0, PGMVRT DECIMAL(15,3) NOT NULL DEFAULT 0, CSTORAVA DECIMAL(15,0) NOT NULL DEFAULT 0, AVGSQA DECIMAL(15,0) NOT NULL DEFAULT 0, AVGCSAF DECIMAL(15,0) NOT NULL DEFAULT 0, AVGCSAT DECIMAL(15,0) NOT NULL DEFAULT 0, IOPIPB DECIMAL(6,3) NOT NULL DEFAULT 0, IORPII DECIMAL(15,3) NOT NULL DEFAULT 0, IOPALB DECIMAL(15,3) NOT NULL DEFAULT 0 ) IN JANSDB.JANSTS1; CREATE UNIQUE INDEX IX1_PERF_RMF_INT ON PERF_RMF_INT (PERF_RMF_INT_ID ASC) USING STOGROUP SYSDEFLT PRIQTY 64 SECQTY 64 ERASE YES BUFFERPOOL BP2 CLOSE YES; ALTER TABLE PERF_RMF_INT ADD CONSTRAINT PERF_RMF_INT_ID PRIMARY KEY (PERF_RMF_INT_ID);

SQL code for creating Foreign Key constraints:

ALTER TABLE PERF_DAY_AVG ADD CONSTRAINT DAY_AVG_FK_LPAR FOREIGN KEY (LPAR_ID) REFERENCES LPAR (LPAR_ID); ALTER TABLE PERF_RMF_INT ADD CONSTRAINT RMF_INT_FK_DAY_AVG FOREIGN KEY (PERF_DAY_AVG_ID) REFERENCES PERF_DAY_AVG (PERF_DAY_AVG_ID); ALTER TABLE PERF_RMF_INT ADD CONSTRAINT RMF_INT_FK_LPAR FOREIGN KEY (LPAR_ID) REFERENCES LPAR (LPAR_ID);

In DB2 Admin tool, Databases panel you can now view the configuration of your newly create database. Use '?' line commands to see all actions you can perform in this panel, here are few of the more useful ones: - A - Authorizations on database. - DDL - Generate SQL for the database only – It generates SQL code for creating selected database. Useful when you want to model your database on another existing database. - DIS - Display database status “-DIS DB(JANSDB) SPACE(*) LIMIT(*)”. - DISC - Display information about SQL claimers “-DIS DB(JANSDB) SPACE(*) CLAIMERS LIMIT(*)” - DISL - Display information about locks “-DIS DB(JANSDB) SPACE(*) LOCKS LIMIT(*)”. - DISU - Display information about current use “-DIS DB(JANSDB) SPACE(*) USE LIMIT(*)”. - DSP - Display database structure with plans and packages. A nice way to see the strucutre of the entire database:

PSID/ Type Object Name Owner DBID ISOBID OBID Note * * * * * * * ------- -----------------------> -------- ------ ------ ------ ----------- D------ JANSDB------------------ JSADEK 338 0 0 S JANSTS1 JSADEK 338 2 1 Segmented T LPAR JSADEK 338 0 5 CHR DAY_AVG_FK_LPAR 0 0 14 Child CHR RMF_INT_FK_LPAR 0 0 16 Child UC LPAR_ID 0 0 0 Primary key X IX1_LPAR JSADEK 338 7 6 T PERF_DAY_AVG JSADEK 338 0 8 CHR RMF_INT_FK_DAY_AVG 0 0 15 Child PAR DAY_AVG_FK_LPAR 0 0 14 Parent UC PERF_DAY_AVG_ID 0 0 0 Primary key X IX1_PERF_DAY_AVG JSADEK 338 10 9 T PERF_RMF_INT JSADEK 338 0 11 PAR RMF_INT_FK_DAY_AVG 0 0 15 Parent PAR RMF_INT_FK_LPAR 0 0 16 Parent UC PERF_RMF_INT_ID 0 0 0 Primary key X IX1_PERF_RMF_INT JSADEK 338 13 12

- GEN - Generate SQL for database from DB2 catalog. This command generates a job that in turn generates SQL code for creating all objects in the database. E.g. database, table spaces, index spaces, tables, constraints and so on. - REP – Generate report from the DB2 catalog. With the job generated by this command you can easily display all objects in the database, tables, indexed, columns etc.

Solution 4

SQL code:

INSERT INTO LPAR ( LPAR_NAME, CPU_MODEL, Z_OS_VERSION, MAINTANANCE_LEVEL, KEEP_RMFINT_FOR_X_DAYS, COMPANY_NAME ) VALUES( 'MVS1', '2097.705', '2.2', '2017-06-22', 80, 'TEST SYSTEM' );

Not all values are given. LPAR_ID will be automatically calculated. KEEP_DAYAVG_FOR_X_DAYS has a default value of 2000 and COMMENTS are optional. DB2 QMF provides interface through which you can insert records in even easier way. Instead of selecting F6 (Query) we'll use F8 (Edit Table):

LPAR_ID . . . . . . . ( 2 ) LPAR_NAME . . . . . . ( MVS2 ) CPU_MODEL . . . . . . ( 2097.705 ) Z_OS_VERSION. . . . . ( 2.3 ) MAINTANANCE_LEVEL . . ( 13.03.2017 ) KEEP_RMFINT_FOR_X_DAY ( 80 ) KEEP_DAYAVG_FOR_X_DAY ( 2000 ) COMPANY_NAME. . . . . ( ANOTHER TEST SYSTEM ) COMMENTS. . . . . . . ( IF YOU'RE VIEWING THIS RECORD YOU MUST BE SO BORED >

If your field is larger than the panel like in the case of COMMENTS field you can use F5 to expand it. Unfortunately in that panel you must supply all values that are NOT NULL, even if they have default value or are auto-incremental like LPAR_ID.

Solution 5

RMF job included in Hint 2 actually generates two reports, one for the LPAR (the first half) and one for the entire Sysplex (the second half). In this job, only one field STOTOT(POLICY) extracts Sysplex wide data, therefore in the second report there should be only one column:

08/14 01.50.00 00.10.00 39.4 24.5 99.0 24.3 08/14 02.00.00 00.09.59 39.5 13.6 99.6 13.3 792 ZV020200 2350002017218 100000.09.59 ..H[...*............ 08/06 23.50.00 00.10.00 2593146 08/07 00.00.00 00.10.00 2528790 08/07 00.10.00 00.09.59 2532396

Unfortunately there is no possibility to skip this data in RMF job. We must exclude this data with DFSORT.

Solution 6

In general following job does four things: - Omits header and the second part of RMF report which we don't need. - Skips the third column (RMF interval) since it won't be loaded into PERF_RMF_INT table. - Adds LPAR_ID as the first column. This is a mandatory field in PERF_RMF_INT table and must be available in input for the LOAD Utility. If you use newer version of DB2 you can use CONSTANT control statement in LOAD Utility instead. - Changes date format from 'MM/DD' to 'YYYY-MM-DD'.

// EXPORT SYMLIST=(YEAR) // SET INPUT=&SYSUID..RMF.FULL // SET OUTPUT=&SYSUID..RMF.FULL.REORG // SET YEAR=2017 //SORT EXEC PGM=SORT //SORTIN DD DISP=SHR,DSN=&INPUT //SORTOUT DD DSN=&OUTPUT,SPACE=(TRK,(90,90),RLSE),DISP=(NEW,CATLG) //SYSOUT DD SYSOUT=* //SYSIN DD *,SYMBOLS=EXECSYS OMIT COND=(9,8,CH,EQ,C'RMFOVREC',OR,5,2,BI,LT,60) SORT FIELDS=COPY OUTREC FIELDS=(1,4,C'3 ',C'&YEAR.-',21,2,C'-',24,2,26,9,44,321)

Solution 7

JCL Code:

//UTILLOAD EXEC DSNUPROC,SYSTEM=DB21,UID='JSADEK.UT.LOAD' <-- DB2 SYS //SYSUT1 DD DISP=(,PASS),SPACE=(CYL,(20,80)) <-- SORT WRK DS //SORTWK01 DD DISP=(,PASS),SPACE=(CYL,(20,80)) <-- SORT WRK DS //SORTWK02 DD DISP=(,PASS),SPACE=(CYL,(20,80)) <-- SORT WRK DS //SORTWK03 DD DISP=(,PASS),SPACE=(CYL,(20,80)) <-- SORT WRK DS //SORTOUT DD DISP=(,PASS),SPACE=(CYL,(20,80)) <-- SORT WRK DS //SYSMAP DD DISP=(,PASS),SPACE=(CYL,(20,80)) <-- MAPPING WRK //SYSERR DD DISP=(,PASS),SPACE=(CYL,(20,80)) <-- ERROR WRK //RNPRIN01 DD SYSOUT=* <-- SORT MESSAGES //STPRIN01 DD SYSOUT=* <-- SORT MESSAGES //UTPRINT DD SYSOUT=* <-- SORT MESSAGES //SYSDISC DD SYSOUT=* <-- RECORDS NOT LOADED BY THE UTILITY //SYSPRINT DD SYSOUT=* <-- DB2 UTIL OUTPUT MESSAGES //SYSREC DD DISP=SHR,DSN=SYSU.RMF.FULL.REORG <-- INPUT //SYSIN DD * <-- CONTROL STATEMENTS LOAD DATA INTO TABLE JSADEK.PERF_RMF_INT ( LPAR_ID POSITION (1:1) INTEGER EXTERNAL , DATE POSITION (3:12) DATE EXTERNAL , TIME POSITION (14:21) TIME EXTERNAL , CPUBSY POSITION (22:32) DECIMAL EXTERNAL , IIPBSY POSITION (33:42) DECIMAL EXTERNAL , MVSBSY POSITION (43:52) DECIMAL EXTERNAL , IIPMBSY POSITION (53:62) DECIMAL EXTERNAL , AVGWUCP POSITION (63:72) DECIMAL EXTERNAL , AVGWUIIP POSITION (73:82) DECIMAL EXTERNAL , NLDEFL POSITION (83:92) DECIMAL EXTERNAL , NLDEFLCP POSITION (93:102) DECIMAL EXTERNAL , NLDEFLIP POSITION (103:112) DECIMAL EXTERNAL , NLACTL POSITION (113:122) DECIMAL EXTERNAL , NLACTLCP POSITION (123:132) DECIMAL EXTERNAL , NLACTLIP POSITION (133:142) DECIMAL EXTERNAL , WDEFL POSITION (143:152) DECIMAL EXTERNAL , WDEFLIIP POSITION (153:162) DECIMAL EXTERNAL , WACTL POSITION (163:172) DECIMAL EXTERNAL , LDEFMSU POSITION (173:182) DECIMAL EXTERNAL , LACTMSU POSITION (183:192) DECIMAL EXTERNAL , WCAPPER POSITION (193:202) DECIMAL EXTERNAL , INICAP POSITION (203:212) DECIMAL EXTERNAL , LIMCPU POSITION (213:222) DECIMAL EXTERNAL , STOTOT POSITION (223:232) DECIMAL EXTERNAL , STORAGE POSITION (233:242) DECIMAL EXTERNAL , TPAGRT POSITION (243:252) DECIMAL EXTERNAL , PAGERT POSITION (253:262) DECIMAL EXTERNAL , PGMVRT POSITION (263:272) DECIMAL EXTERNAL , CSTORAVA POSITION (273:282) DECIMAL EXTERNAL , AVGSQA POSITION (283:292) DECIMAL EXTERNAL , AVGCSAF POSITION (293:302) DECIMAL EXTERNAL , AVGCSAT POSITION (303:312) DECIMAL EXTERNAL , IOPIPB POSITION (313:322) DECIMAL EXTERNAL , IORPII POSITION (323:332) DECIMAL EXTERNAL , IOPALB POSITION (333:342) DECIMAL EXTERNAL ) RESUME YES

Few things to mention regarding this job: - If your data is sorted you can specify PRESORTED=YES. By default LOAD Utility sorts input records. - You should ensure that Primary Key is unique. - You should ensure that values specified as Foreign Keys are present in the connected tables. - RESUME YES – this means that the data is to be loaded even if Table Space is not empty. We already have some data in the Table Space since it's shared by all three tables in our database so we must use this keyword. - EXTERNAL – You must use this keyword if you load data created by some external tool like RMF for instance. - POSITION – specifies in which columns data is stored. In case of DATE & TIME it must be exact (spaces at the beginning/end are not allowed). “2017-01-22” - OK, “ 2017-01-22” - ERROR. In case of numeric fields spaces are trimmed so “ 33.12” field will be read correctly. - SYSTEM – DB2 subsystem prefix. - UID – This is the ID of the Utility execution. It can be anything. You can display it with “/db2pref DIS UTIL(*)” command or via Display/Terminate Utilities panel of DB2 Admin Tool.

DSNU105I -DB21 DSNUGDIS - USERID = JSADEK MEMBER = UTILID = JSADEK.UT.LOAD PROCESSING UTILITY STATEMENT 1 UTILITY = LOAD PHASE = RELOAD COUNT = 0 NUMBER OF OBJECTS IN LIST = 1 LAST OBJECT STARTED = 1 STATUS = ACTIVE

SQL Basics

Introduction

SQL (Structured Query Language) is the universal language used by all relational databases. There are some small differences in SQL language and build-in function depending whether you use DB2, Oracle Database, PostgreSQL etc. There are even some differences between DB2 for z/OS and DB2 for UNIX/Windows so make sure you use proper documentation. Having said that remember that there are hundreds of online SQL courses out there, and most often SQL queries works the same way on all databases. In this Assignment, you'll perform some typical SQL queries but you'll also have to use SQL more creatively to perform some less common and more difficult activities. We'll use database created in “Setting up a database” Assignment. Unless specified otherwise all exercises refer to PERF_RMF_INT table.

Tasks

![]() 1. SELECT statement - Part I:

- Display all records of PERF_RMF_INT table.

- Display all records from PERF_RMF_INT table ordering them from the highest CPU usage (CPUBSY column).

- Display all records where value of MVSBSY field is higher or equal to 80.

- Display records between 8AM and 4PM from a single day.

- Display DATE, TIME and MVSBSY columns where MVSBSY value is greater than 60, sorted from the highest to lowest value.

- Display records in which STOTOT (used RAM) is greater or equal to 70% of STORAGE (total available RAM) value.

- Display DATE, TIME, MVSBSY and IIPMBSY columns ordered by sum of MVSBSY & IIPMBSY values. Retrieve only 50 rows with highest values.

- Use LACTMSU column and the data about CPC power of your system (search web for LSPR ITR ratios) to calculate MIPS usage for each record in PERF_RMF_INT table. Output should contain DATE, TIME, MVSBSY, LACTMSU and MIPS columns. Rename MVSBSY column to CPU and LACTMSU to MSU.

- Display all records from PERF_RMF_INT table from a single LPAR. Use LPAR_NAME column from LPAR table as selection criteria.

- Display all records from PERF_RMF_INT table from a single LPAR that are older than the value specified in KEEP_RMFINT_FOR_X_DAYS field of LPAR table.

1. SELECT statement - Part I:

- Display all records of PERF_RMF_INT table.

- Display all records from PERF_RMF_INT table ordering them from the highest CPU usage (CPUBSY column).

- Display all records where value of MVSBSY field is higher or equal to 80.

- Display records between 8AM and 4PM from a single day.

- Display DATE, TIME and MVSBSY columns where MVSBSY value is greater than 60, sorted from the highest to lowest value.

- Display records in which STOTOT (used RAM) is greater or equal to 70% of STORAGE (total available RAM) value.

- Display DATE, TIME, MVSBSY and IIPMBSY columns ordered by sum of MVSBSY & IIPMBSY values. Retrieve only 50 rows with highest values.

- Use LACTMSU column and the data about CPC power of your system (search web for LSPR ITR ratios) to calculate MIPS usage for each record in PERF_RMF_INT table. Output should contain DATE, TIME, MVSBSY, LACTMSU and MIPS columns. Rename MVSBSY column to CPU and LACTMSU to MSU.

- Display all records from PERF_RMF_INT table from a single LPAR. Use LPAR_NAME column from LPAR table as selection criteria.

- Display all records from PERF_RMF_INT table from a single LPAR that are older than the value specified in KEEP_RMFINT_FOR_X_DAYS field of LPAR table.

![]() 2. SELECT Statement - part II:

- Use LIKE operator to display all records from LPAR table where LPAR_NAME starts with letter 'T' (or any other letter).

- Use LIKE operator to display all records from LPAR table where CUSTOMER_NAME contains string "TEST" word.

- Use LIKE operator to display all records from LPAR table where CUSTOMER_NAME contains 'A' letter on the second position and the entire string has at least three letters.

- Use IN operator to display all records from PERF_RMF_INT table with MVSBSY value equal to 0, 5 or 10.

- Use IN operator to display records from three selected days only, for example 7, 9 and 12 of August.

- Use IN operator to display all records from PERF_RMF_INT table that are related to two selected LPARS (use LPAR_NAME column instead of LPAR_ID).

- Use EXIST statement to display rows from PERF_DAY_AVG with the DATE that's also present in any record from PERF_RMF_INT table.

- Use ANY operator to check which record IDs from PERF_DAY_AVG are referenced in PERF_RMF_INT table.

- Use ALL operator to check if all records in PERF_DAY_AVG from the last month are related to a single LPAR.

- Use BETWEEN operator to display all records from PERF_RMF_INT where MVSBSY values are between 30 and 50 percent.

- Use DISTINCT operator to display all dates present in PERF_RMF_INT table.

2. SELECT Statement - part II:

- Use LIKE operator to display all records from LPAR table where LPAR_NAME starts with letter 'T' (or any other letter).

- Use LIKE operator to display all records from LPAR table where CUSTOMER_NAME contains string "TEST" word.

- Use LIKE operator to display all records from LPAR table where CUSTOMER_NAME contains 'A' letter on the second position and the entire string has at least three letters.

- Use IN operator to display all records from PERF_RMF_INT table with MVSBSY value equal to 0, 5 or 10.

- Use IN operator to display records from three selected days only, for example 7, 9 and 12 of August.

- Use IN operator to display all records from PERF_RMF_INT table that are related to two selected LPARS (use LPAR_NAME column instead of LPAR_ID).

- Use EXIST statement to display rows from PERF_DAY_AVG with the DATE that's also present in any record from PERF_RMF_INT table.

- Use ANY operator to check which record IDs from PERF_DAY_AVG are referenced in PERF_RMF_INT table.

- Use ALL operator to check if all records in PERF_DAY_AVG from the last month are related to a single LPAR.

- Use BETWEEN operator to display all records from PERF_RMF_INT where MVSBSY values are between 30 and 50 percent.

- Use DISTINCT operator to display all dates present in PERF_RMF_INT table.

![]() 3. INSERT statement:

- Insert one row into LPAR table. Specify all fields. Use random values.

- Insert one row into PERF_RMF_INT table. Specify mandatory fields only. Use random values.

- Insert one row into PERF_RMF_INT table. Specify 4 fields: LPAR_ID, DATE, TIME & MVSBSY. LPAR_ID should store value of the last system in LPAR table. DATA & TIME should store current date and time. MVSBSY field should contain the maximum value from MVSBSY column.

- Calculate average value for all columns from PERF_RMF_INT table that are present in PERF_DAY_AVG table. Average should be calculated from a single system and a single day. Insert the result into PERF_DAY_AVG table.

- Insert four rows into PERF_RMF_INT table in a single query. Specify mandatory fields only. Use random values.

3. INSERT statement:

- Insert one row into LPAR table. Specify all fields. Use random values.

- Insert one row into PERF_RMF_INT table. Specify mandatory fields only. Use random values.

- Insert one row into PERF_RMF_INT table. Specify 4 fields: LPAR_ID, DATE, TIME & MVSBSY. LPAR_ID should store value of the last system in LPAR table. DATA & TIME should store current date and time. MVSBSY field should contain the maximum value from MVSBSY column.

- Calculate average value for all columns from PERF_RMF_INT table that are present in PERF_DAY_AVG table. Average should be calculated from a single system and a single day. Insert the result into PERF_DAY_AVG table.

- Insert four rows into PERF_RMF_INT table in a single query. Specify mandatory fields only. Use random values.

![]() 4. UPDATE statement:

- Set KEEP_RMFINT_FOR_X_DAYS value in the last record of LPAR table to 60.

- Nullify COMMENTS field in all records of LPAR table.

- Use INSERT statement to copy first 10 records from yesterday from PERF_RMF_INT table. Next, update MVSBSY and AVGWUCP columns so the copied rows contain ceiling of their original values.

- UPDATE MVSBSY field in the last row of PERF_RMF_INT table. It should store average value of the entire MVSBSY column.

4. UPDATE statement:

- Set KEEP_RMFINT_FOR_X_DAYS value in the last record of LPAR table to 60.

- Nullify COMMENTS field in all records of LPAR table.

- Use INSERT statement to copy first 10 records from yesterday from PERF_RMF_INT table. Next, update MVSBSY and AVGWUCP columns so the copied rows contain ceiling of their original values.

- UPDATE MVSBSY field in the last row of PERF_RMF_INT table. It should store average value of the entire MVSBSY column.

![]() 5. DELETE statement:

- Delete all records from a single day.

- Delete all records where PERF_DAY_AVG_ID is NULL.

- Delete all records from PERF_RMF_INT table with DATE that's not present in any record of PERF_DAY_AVG table.

5. DELETE statement:

- Delete all records from a single day.

- Delete all records where PERF_DAY_AVG_ID is NULL.

- Delete all records from PERF_RMF_INT table with DATE that's not present in any record of PERF_DAY_AVG table.

![]() 6. Build-In functions:

- Display DATE, TIME and DAYS column where DAYS stores number of days between values in DATE column and the current date.

- Display total number of records stored in PERF_RMF_INT table.

- Display number of records in which MVSBSY value is higher than 80% during the day (8:00 - 20:00).

- Display DATE, AVG_MVSBSY and DAY_OF_WEEK columns. AVG_MVSBSY column should display an average of all values in MVSBSY column from a single day. DAY_OF_WEEK column should display day number (1 for Monday, 2 for Tuesday etc.).

- Display DATE, TIME, MVSBSY & DATE_STR columns. Use YEAR, DAYOFYEAR and TO_CHAR functions to convert the date to 'YYYY.DDD' format in DATE_STR column.

- Use DIGITS function against PERF_RMF_INT_ID, MVSBSY & STOTOT columns.

- Use MONTHS_BETWEEN function to calculate how many months passed since April 1921 to December 2013.

- Convert all zeroes in MVSBSY column to 'X' characters.

- Convert spaces in COMPANY_NAME column in LPAR table. First, trim both leading and trailing blanks. Then, convert every space to '-' character. At the end, display COMPANY_NAME on 30 chars adding '.' as filler appearing after the string. For example " TEST COMPANY " should be displayed as "TEST-COMPANY..................".

- Display MVSBSY column values as follows ">>>>>>>>>3.0<<<<<<<<". Field should have 20 chars and the value should be in the middle of the string.

- Display all records in which MVSBSY column that contains '8' number anywhere in its value, for instance '8.0', '38.1' or '12.8'.

6. Build-In functions:

- Display DATE, TIME and DAYS column where DAYS stores number of days between values in DATE column and the current date.

- Display total number of records stored in PERF_RMF_INT table.

- Display number of records in which MVSBSY value is higher than 80% during the day (8:00 - 20:00).

- Display DATE, AVG_MVSBSY and DAY_OF_WEEK columns. AVG_MVSBSY column should display an average of all values in MVSBSY column from a single day. DAY_OF_WEEK column should display day number (1 for Monday, 2 for Tuesday etc.).

- Display DATE, TIME, MVSBSY & DATE_STR columns. Use YEAR, DAYOFYEAR and TO_CHAR functions to convert the date to 'YYYY.DDD' format in DATE_STR column.

- Use DIGITS function against PERF_RMF_INT_ID, MVSBSY & STOTOT columns.

- Use MONTHS_BETWEEN function to calculate how many months passed since April 1921 to December 2013.

- Convert all zeroes in MVSBSY column to 'X' characters.

- Convert spaces in COMPANY_NAME column in LPAR table. First, trim both leading and trailing blanks. Then, convert every space to '-' character. At the end, display COMPANY_NAME on 30 chars adding '.' as filler appearing after the string. For example " TEST COMPANY " should be displayed as "TEST-COMPANY..................".

- Display MVSBSY column values as follows ">>>>>>>>>3.0<<<<<<<<". Field should have 20 chars and the value should be in the middle of the string.

- Display all records in which MVSBSY column that contains '8' number anywhere in its value, for instance '8.0', '38.1' or '12.8'.

![]() 7. GROUP BY & HAVING statements:

- Display DATE, MVSBSY and STOTOT columns. Calculate average values of MVSBSY and STOTOT columns so one record represents a single day.

- Display DATE and INT_CNT columns where INT_CNT is the number of records for each day.

- Display DATE, MVSBSY and LACTMSU columns. Calculate average of MVSBSY column and sum of LACTMSU column so one row represents one day. Display only days in which all intervals are present.

- Display DATE, MVSBSY and LACTMSU columns. Calculate average of MVSBSY column and sum of LACTMSU column so one row represent working hours of a single day (8AM – 4PM).

- Use LACTMSU column and the data about CPC power of your system (LSPR ITR ratios) to calculate MIPS usage for each record in PERF_RMF_INT table. Calculate sum of MSU column and the average of CPU and MIPS columns for each day. Also, add another column INT_CNT which shows how many records are present for each day.

- Delete records from PERF_DAY_AVG table that don't have all corresponding intervals (records with the same date) in PERF_RMF_INT table (96 or 144 records depending on RMF setting) but only if those records are from the last 7 days. Due to Foreign Key constraint you'll also have to remove all records from PERF_RMF_INT table that reference records from PERF_DAY_AVG you want to remove.

7. GROUP BY & HAVING statements:

- Display DATE, MVSBSY and STOTOT columns. Calculate average values of MVSBSY and STOTOT columns so one record represents a single day.

- Display DATE and INT_CNT columns where INT_CNT is the number of records for each day.

- Display DATE, MVSBSY and LACTMSU columns. Calculate average of MVSBSY column and sum of LACTMSU column so one row represents one day. Display only days in which all intervals are present.

- Display DATE, MVSBSY and LACTMSU columns. Calculate average of MVSBSY column and sum of LACTMSU column so one row represent working hours of a single day (8AM – 4PM).

- Use LACTMSU column and the data about CPC power of your system (LSPR ITR ratios) to calculate MIPS usage for each record in PERF_RMF_INT table. Calculate sum of MSU column and the average of CPU and MIPS columns for each day. Also, add another column INT_CNT which shows how many records are present for each day.

- Delete records from PERF_DAY_AVG table that don't have all corresponding intervals (records with the same date) in PERF_RMF_INT table (96 or 144 records depending on RMF setting) but only if those records are from the last 7 days. Due to Foreign Key constraint you'll also have to remove all records from PERF_RMF_INT table that reference records from PERF_DAY_AVG you want to remove.

![]() 8. WITH clause:

- Display DATE, TIME, MVSBSY and LPAR_NAME columns. Use WITH clause to join LPAR_NAME column from LPAR table with the main query.

- Display count of records in which MVSBSY value is higher than 80% during the day (8:00 - 20:00) in comparison to count of records in which MVSBSY value is higher than 80% during the night (20:00 - 8:00). Use WITH clause to display both values in a single row.

- Display DATE, DAY_NBR, DAY_NAME & AVG_MVSBSY columns. AVG_MVSBSY column is an average MVSBSY value from a specific day. DAY_NBR column should store the day number ('1' for Monday, '2' for Tuesday etc). DAY_NAME should store the day name ('Monday' for 1, 'Tuesday' for 2 etc). Use temporary table with two columns DAY_ID and DAY_NAME which assigns a day name to every day number.

8. WITH clause:

- Display DATE, TIME, MVSBSY and LPAR_NAME columns. Use WITH clause to join LPAR_NAME column from LPAR table with the main query.

- Display count of records in which MVSBSY value is higher than 80% during the day (8:00 - 20:00) in comparison to count of records in which MVSBSY value is higher than 80% during the night (20:00 - 8:00). Use WITH clause to display both values in a single row.

- Display DATE, DAY_NBR, DAY_NAME & AVG_MVSBSY columns. AVG_MVSBSY column is an average MVSBSY value from a specific day. DAY_NBR column should store the day number ('1' for Monday, '2' for Tuesday etc). DAY_NAME should store the day name ('Monday' for 1, 'Tuesday' for 2 etc). Use temporary table with two columns DAY_ID and DAY_NAME which assigns a day name to every day number.

![]() 9. Data formating:

- Display DATE, TIME and MVSBSY columns. Reformat MVSBSY to "nnn.n" format.

- Display DATE, TIME and MVSBSY columns. Copy MVSBSY twice, format first copy as integer and the second one a decimal number in "nnn" format. What's the difference?

- Display DATE, TIME and MVSBSY columns. Copy MVSBSY four times. Format first column to 'nnn.n' decimal number. Other three columns should store floor, ceiling and rounded value in SMALLINT format.

- Display LPAR_NAME and COMPANY_NAME from LPAR table. Display only first 12 characters of COMPANY_NAME.

- Display DATE, TIME and MVSBSY columns. Format DATE to "YY/MM/DD" format.

- Display joined DATE AND TIME columns as a string in "YYYY.MM.DD-HH.MM.SS" format.

- Convert DATE and TIME fields to TIMESTAMP data type. Display DATE, TIME and TIMESTAMP columns.

- Display following columns: DATE in "YYYY.DDD" format, DATE in "DD.MM.YY" format, DATE in USA format, DATE in ISO format, TIME in "HH:MM" format, TIME in "MM.SS" format, TIME in USA format, TIME in ISO format, TIME in 'HH AM/PM' format.

9. Data formating:

- Display DATE, TIME and MVSBSY columns. Reformat MVSBSY to "nnn.n" format.

- Display DATE, TIME and MVSBSY columns. Copy MVSBSY twice, format first copy as integer and the second one a decimal number in "nnn" format. What's the difference?

- Display DATE, TIME and MVSBSY columns. Copy MVSBSY four times. Format first column to 'nnn.n' decimal number. Other three columns should store floor, ceiling and rounded value in SMALLINT format.

- Display LPAR_NAME and COMPANY_NAME from LPAR table. Display only first 12 characters of COMPANY_NAME.

- Display DATE, TIME and MVSBSY columns. Format DATE to "YY/MM/DD" format.

- Display joined DATE AND TIME columns as a string in "YYYY.MM.DD-HH.MM.SS" format.

- Convert DATE and TIME fields to TIMESTAMP data type. Display DATE, TIME and TIMESTAMP columns.

- Display following columns: DATE in "YYYY.DDD" format, DATE in "DD.MM.YY" format, DATE in USA format, DATE in ISO format, TIME in "HH:MM" format, TIME in "MM.SS" format, TIME in USA format, TIME in ISO format, TIME in 'HH AM/PM' format.

![]() 10. UNION statement:

- Use UNION statement to join ID, DATE, MVSBSY, CPUBSY and STOTOT columns from both PERF_RMF_INT and PERF_DAY_AVG tables.

- Use UNION statement to join DATE and MVSBSY columns from PERF_RMF_INT and PERF_DAY_AVG tables. How many rows are displayed? Then repeat the query using UNION ALL. What's the difference?

- Perform the same activity as above but this time include third column COUNT that shows how many records were joined.

- Use UNION ALL statement to join MVSBSY, STOTOT and LPAR_ID from PERF_DAY_AVG table into a single column.

- Create a table DOGS with 2 columns, RACE and NAME. Insert 4 rows into table using UNION ALL and SYSIBM.SYSDUMMY1. Display all rows and remove the table at the end of the script.

- Perform the same activity as above using WITH clause.

10. UNION statement:

- Use UNION statement to join ID, DATE, MVSBSY, CPUBSY and STOTOT columns from both PERF_RMF_INT and PERF_DAY_AVG tables.

- Use UNION statement to join DATE and MVSBSY columns from PERF_RMF_INT and PERF_DAY_AVG tables. How many rows are displayed? Then repeat the query using UNION ALL. What's the difference?

- Perform the same activity as above but this time include third column COUNT that shows how many records were joined.

- Use UNION ALL statement to join MVSBSY, STOTOT and LPAR_ID from PERF_DAY_AVG table into a single column.

- Create a table DOGS with 2 columns, RACE and NAME. Insert 4 rows into table using UNION ALL and SYSIBM.SYSDUMMY1. Display all rows and remove the table at the end of the script.

- Perform the same activity as above using WITH clause.

![]() 11. JOIN statement:

- Explain the difference between 5 forms of JOIN clause: INNER JOIN, LEFT OUTER JOIN, RIGHT OUTER JOIN, FULL OUTER JOIN and CROSS JOIN.

- Display LPAR_NAME, DATE and MVSBSY columns from LPAR and PERF_DAY_AVG tables using INNER JOIN on LPAR_ID column.

Create two temporary tables as shown below (remove them at the end of the query). Issue all 5 types of JOINs against them.

11. JOIN statement:

- Explain the difference between 5 forms of JOIN clause: INNER JOIN, LEFT OUTER JOIN, RIGHT OUTER JOIN, FULL OUTER JOIN and CROSS JOIN.

- Display LPAR_NAME, DATE and MVSBSY columns from LPAR and PERF_DAY_AVG tables using INNER JOIN on LPAR_ID column.

Create two temporary tables as shown below (remove them at the end of the query). Issue all 5 types of JOINs against them.

DOGS TABLE: OWNERS TABLE: ------------------------- ------------------ RACE NAME OWNER_ID OWNER_ID NAME ------------------------- ------------------ HUSKY LILLY 1 1 JONATHAN GREYHOUND JACK 1 2 DONALD YORK DUMMY 2 3 SARAH BEAGLE FLUFFY 4

- Use DOGS & OWNERS table as in previous exercise. Display only rows that cannot be joined on the basis of OWNER_ID column. In other words, subtract INNER JOIN rows from FULL OUTER JOIN rows. - Add a third table PET_SHOP as shown below. JOIN DOGS, OWNERS and PET_SHOP tables using all 5 types of JOINs.

RACE PRICE ------------- HUSKY 300.00 BEAGLE 99.99 BOXER 25.50

- Modify FULL JOIN statement from the previous exercise in a way that RACE column value is taken from either PET_SHOP or DOGS tables depending on where it is present. - JOIN all three tables in such way that all records from OWNERS and DOGS are included in the result but PRICE is added only if the RACE is present in DOGS table. - Use FULL JOIN to join all three tables but exclude records with JONATHAN owner. - Use FULL JOIN to join all three tables. This time exclude records with JONATHAN from joining operation, not from the final result. - Use JOIN, WITH, EXISTS and GROUP BY statements to cross reference two queries: dates in PERF_DAY_AVG that are also present in PERF_RMF_INT against all dates from PERF_DAY_AVG. Group records so only unique pairs are displayed.

Hint 1-11

All you need for this Assignment is “DB2 11 for z/OS: SQL Reference”. Also feel free to use online resource whenever you're stuck. SQL language is pretty flexible and sometimes there are many ways in which you can solve a particular problem.

Solution 1

- Display all records from PERF_RMF_INT table. SELECT * FROM PERF_RMF_INT ; or SELECT * FROM JSADEK.PERF_RMF_INT ; 'JSADEK' is something called Schema. Schame is a logical classification of various objects in the database. For example all objects created by you, databases, table spaces, tables, indexes etc. have your ID as Schema. Schemas simply object management and authorization. SYSIBM is a Schema of objects used by DB2 and you shouldn't used this schema for your objects. If you use SPUFI it will limit number of records retrieved by your queries. To view all records you can change “MAX SELECT LINES” and output data set characteristic. Still, remember that it's a safety measure and you should use COUNT(*) function to see the amount of records retrieved by your query. ________________________________________________________________________________ - Display all records from PERF_RMF_INT table ordering them from the highest CPU usage (CPUBSY column). SELECT * FROM PERF_RMF_INT ORDER BY CPUBSY DESC ; ________________________________________________________________________________ - Display all records where value of MVSBSY field is higher or equal to 80. SELECT * FROM PERF_RMF_INT WHERE MVSBSY>=80 ; ________________________________________________________________________________ - Display records between 8AM and 4PM from a single day. SELECT * FROM PERF_RMF_INT WHERE TIME>='08:00:00' AND TIME<='16:00:00' AND DATE='2017-08-08' ; ________________________________________________________________________________ - Display DATE, TIME and MVSBSY columns where MVSBSY value is greater than 60, sorted from the highest to lowest value. SELECT DATE,TIME,MVSBSY FROM PERF_RMF_INT WHERE MVSBSY>60 ORDER BY MVSBSY DESC ; When you create larger queries it's a good habit to start each clause in new line. It makes query more readable. ________________________________________________________________________________ - Display records in which STOTOT (used RAM) is greater or equal to 70% of STORAGE (total available RAM) value. SELECT * FROM PERF_RMF_INT WHERE STOTOT>=0.7*STORAGE ; ________________________________________________________________________________ - Display DATE, TIME, MVSBSY and IIPMBSY columns ordered by sum of MVSBSY & IIPMBSY values. Retrieve only 50 rows with highest values. SELECT DATE,TIME,MVSBSY,IIPMBSY,(MVSBSY+IIPMBSY) AS SUMBSY FROM PERF_RMF_INT ORDER BY SUMBSY DESC FETCH FIRST 50 ROWS ONLY ; ________________________________________________________________________________ - Use LACTMSU column and the data about CPC power of your system (search web for LSPR ITR ratios) to calculate MIPS usage for each record in PERF_RMF_INT table. Output should contain DATE, TIME, MVSBSY, LACTMSU and MIPS columns. Rename MVSBSY column to CPU and LACTMSU to MSU. To finish this assignment we need to know know CPC power. You can check CPC model with “D M=CPU” command and then check MIPS power for your CPC under the following link (z/OS 2.1): https://www-304.ibm.com/servers/resourcelink/lib03060.nsf/pages/lsprITRzOSv2r1?OpenDocument For example: Model: 2827.711 PCI: 13188 (PCI is basically equal to MIPS). MSU: 1593 Now we know the power of the entire processor and we also know actual MSU usage of the LPAR (LACTMSU). With this data we can easily calculate average MIPS usage for each record in PERF_RMF_INT table. 13188/1593 = 8.279 – One MSU is equal to 8.279 MIPS. SELECT DATE,TIME,MVSBSY AS CPU,LACTMSU AS MSU, (LACTMSU*8.279) AS MIPS FROM PERF_RMF_INT ; ________________________________________________________________________________ - Display all records from PERF_RMF_INT table from a single LPAR. Use LPAR_NAME column from LPAR table as selection criteria. SELECT * FROM PERF_RMF_INT WHERE LPAR_ID = ( SELECT LPAR_ID FROM LPAR WHERE LPAR_NAME='MVSA' ) ; ________________________________________________________________________________ - Display all records from PERF_RMF_INT table from a single LPAR that are older than the value specified in KEEP_RMFINT_FOR_X_DAYS field of LPAR table. SELECT * FROM PERF_RMF_INT WHERE DAYS(DATE) < DAYS(CURRENT DATE) - ( SELECT KEEP_RMFINT_FOR_X_DAYS FROM LPAR WHERE LPAR_NAME='MVS1' ) ;

Solution 2

- Use LIKE operator to display all records from LPAR table where LPAR_NAME starts with letter 'T'. SELECT * FROM LPAR WHERE LPAR_NAME LIKE 'T%' ; ________________________________________________________________________________ - Use LIKE operator to display all records from LPAR table where CUSTOMER_NAME contains string "TEST" word. SELECT * FROM LPAR WHERE COMPANY_NAME LIKE '%TEST%' ; ________________________________________________________________________________ - Use LIKE operator to display all records from LPAR table where CUSTOMER_NAME contains 'A' letter on the second position and the entire string has at least three letters. SELECT * FROM LPAR WHERE COMPANY_NAME LIKE '_A_%' ; ________________________________________________________________________________ - Use IN operator to display all records from PERF_RMF_INT table with MVSBSY value equal to 0, 5 or 10. SELECT * FROM PERF_RMF_INT WHERE MVSBSY IN (0,5,10); ________________________________________________________________________________ - Use IN operator to display records from three selected days only, for example 7, 9 and 12 of August. SELECT * FROM PERF_RMF_INT WHERE DATE IN('2017-08-07','2017-08-09','2017-08-12') ; ________________________________________________________________________________ - Use IN operator to display all records from PERF_RMF_INT table that are related to two selected LPARS (use LPAR_NAME column instead of LPAR_ID). SELECT * FROM PERF_RMF_INT WHERE LPAR_ID IN ( SELECT LPAR_ID FROM LPAR WHERE LPAR_NAME IN ('TST2','TST4') ) ; ________________________________________________________________________________ - Use EXIST statement to display rows from PERF_DAY_AVG with the DATE that's also present in any record from PERF_RMF_INT table. SELECT DATE FROM PERF_DAY_AVG D WHERE EXISTS ( SELECT * FROM PERF_RMF_INT R WHERE D.DATE = R.DATE ) ; ________________________________________________________________________________ - Use ANY operator to check which record IDs from PERF_DAY_AVG are referenced in PERF_RMF_INT table. SELECT * FROM PERF_DAY_AVG WHERE PERF_DAY_AVG_ID = ANY ( SELECT PERF_DAY_AVG_ID FROM PERF_RMF_INT ) ; Notice that '= ANY' works exactly the same way as IN operator. The difference is that with ANY you can also use other operators such as '>', '<', '<>' and so on. ________________________________________________________________________________ - Use ALL operator to check if all records in PERF_DAY_AVG from the last month are related to a single LPAR. SELECT * FROM LPAR WHERE LPAR_ID = ALL ( SELECT LPAR_ID FROM PERF_DAY_AVG WHERE DATE >= '2017-11-01' AND DATE <= '2017-11-30' ) ; ________________________________________________________________________________ - Use BETWEEN operator to display all records from PERF_RMF_INT where MVSBSY values are between 30 and 50 percent. SELECT * FROM PERF_RMF_INT WHERE MVSBSY BETWEEN 30 AND 50 ; ________________________________________________________________________________ - Use DISTINCT operator to display all dates present in PERF_RMF_INT table. SELECT DISTINCT DATE FROM PERF_RMF_INT ; DISTINCT keyword works in similar way as GROUP BY although GROUP BY allows more flexibility.

Solution 3

- Insert one row into LPAR table. Specify all fields. Use random data. INSERT INTO LPAR VALUES( 4, 'TST4', '2097-701', '2.3', '2017-11-22', 40, 40, 'TEST SYSTEM', 'SOME COMMENTS' ); When you specify all fields in the record you don't need to define columns after the table name. In such case, you need to manually check the last record in table to know what value should be set as Primary Key. You also do that with following query: SELECT MAX(LPAR_ID) FROM LPAR ; You can also use this query inside insert statement: INSERT INTO LPAR VALUES( (SELECT MAX(LPAR_ID) FROM LPAR)+1, 'TST4', ... ________________________________________________________________________________ - Insert one row into PERF_RMF_INT table. Specify mandatory fields only. Use random data. INSERT INTO PERF_RMF_INT( LPAR_ID, DATE, TIME ) VALUES( 2, '2017-11-30', '16:30:00' ); ________________________________________________________________________________ - Insert one row into PERF_RMF_INT table. Specify 4 fields: LPAR_ID, DATE, TIME & MVSBSY. LPAR_ID should store value of the last system in LPAR table. DATA & TIME should store current date and time. MVSBSY field should contain the maximum value from MVSBSY column. INSERT INTO PERF_RMF_INT( LPAR_ID, DATE, TIME, MVSBSY ) VALUES( (SELECT MAX(LPAR_ID) FROM LPAR), CURRENT DATE, CURRENT TIME, (SELECT MAX(MVSBSY) FROM PERF_RMF_INT) ); ________________________________________________________________________________ - Calculate average value for all columns from PERF_RMF_INT table that are present in PERF_DAY_AVG table. Average should be calculated from a single system and a single day. Insert the result into PERF_DAY_AVG table. INSERT INTO PERF_DAY_AVG ( LPAR_ID , DATE , CPUBSY , IIPBSY , MVSBSY , IIPMBSY , AVGWUCP , AVGWUIIP , WDEFL , WDEFLIIP , WACTL , LDEFMSU , LACTMSU , STOTOT , TPAGRT , IORPII , IOPALB ) SELECT AVG(LPAR_ID) , DATE , AVG(CPUBSY) AS CPUBSY , AVG(IIPBSY) AS IIPBSY , AVG(MVSBSY) AS MVSBSY , AVG(IIPMBSY) AS IIPMBSY , AVG(AVGWUCP) AS AVGWUCP , AVG(AVGWUIIP) AS AVGWUIIP , AVG(WDEFL) AS WDEFL , AVG(WDEFLIIP) AS WDEFLIIP , AVG(WACTL) AS WACTL , AVG(LDEFMSU) AS LDEFMSU , AVG(LACTMSU) AS LACTMSU , AVG(STOTOT) AS STOTOT , AVG(TPAGRT) AS TPAGRT , AVG(IORPII) AS IORPII , AVG(IOPALB) AS IOPALB FROM PERF_RMF_INT WHERE LPAR_ID=1 AND DATE='2017-10-30' GROUP BY DATE ; ________________________________________________________________________________ - Insert four rows into PERF_RMF_INT table in a single query. Specify mandatory fields only. Use random values. INSERT INTO PERF_RMF_INT( LPAR_ID, DATE, TIME ) SELECT 2,'2017-11-30','16:30:00' FROM SYSIBM.SYSDUMMY1 UNION ALL SELECT 3,'1997-01-12','04:00:00' FROM SYSIBM.SYSDUMMY1 UNION ALL SELECT 1,'2016-12-11','11:30:00' FROM SYSIBM.SYSDUMMY1 UNION ALL SELECT 2,'2011-07-22','09:31:43' FROM SYSIBM.SYSDUMMY1 ; In DB2 VALUES keyword is used for a single row insert. If you need to insert multiple rows at a time you need to use SELECT sub-query. In this example we don't use any existing table but use SYSIBM.SYSDUMMY1 and UNION ALL to create a small temporary table that stores values we want to insert.

Solution 4

- Set KEEP_RMFINT_FOR_X_DAYS value in the last record of LPAR table to 60. UPDATE LPAR SET KEEP_RMFINT_FOR_X_DAYS = 60 WHERE LPAR_ID=6 ; ________________________________________________________________________________ - Nullify COMMENTS field in all records of LPAR table. UPDATE LPAR SET COMMENTS = NULL ; As you can see changing value of some column in rows is way too easy and you should be careful to not forget about WHERE clause. To avoid such mistakes it's a must have habit to always use SELECT clause before issuing UPDATE or DELETE. This way you'll test your selection criteria beforehand and you'll be always sure what records will be updated/deleted. Also, notice that NULL value is different than COMMENTS='' - in this case you'll update records with an empty string, not with NULL value. Some programs or SQL scripts test for NULL values and may behave differently against NULL and ''. ________________________________________________________________________________ - Use INSERT statement to copy first 10 records from yesterday from PERF_RMF_INT table. Next, update MVSBSY and AVGWUCP columns so the copied rows contain ceiling of their original values. Step 1: Figuring out selection criteria: SELECT * FROM PERF_RMF_INT WHERE JULIAN_DAY(DATE) = JULIAN_DAY(CURRENT DATE)-1 FETCH FIRST 10 ROWS ONLY ; Step 2: Copying selected rows: INSERT INTO PERF_RMF_INT ( PERF_DAY_AVG_ID , LPAR_ID , DATE , TIME , CPUBSY , IIPBSY , MVSBSY , IIPMBSY , AVGWUCP , AVGWUIIP, NLDEFL , NLDEFLCP, NLDEFLIP, NLACTL , NLACTLCP, NLACTLIP, WDEFL , WDEFLIIP, WACTL , LDEFMSU , LACTMSU , WCAPPER , INICAP , LIMCPU , STOTOT , STORAGE , TPAGRT , PAGERT , PGMVRT , CSTORAVA, AVGSQA , AVGCSAF , AVGCSAT , IOPIPB , IORPII , IOPALB ) SELECT PERF_DAY_AVG_ID , LPAR_ID , DATE , TIME , CPUBSY , IIPBSY , MVSBSY , IIPMBSY , AVGWUCP , AVGWUIIP, NLDEFL , NLDEFLCP, NLDEFLIP, NLACTL , NLACTLCP, NLACTLIP, WDEFL , WDEFLIIP, WACTL , LDEFMSU , LACTMSU , WCAPPER , INICAP , LIMCPU , STOTOT , STORAGE , TPAGRT , PAGERT , PGMVRT , CSTORAVA, AVGSQA , AVGCSAF , AVGCSAT , IOPIPB , IORPII , IOPALB FROM PERF_RMF_INT WHERE JULIAN_DAY(DATE) = JULIAN_DAY(CURRENT DATE)-1 FETCH FIRST 10 ROWS ONLY ; Unfortunately we cannot copy entire rows because of Primary Key constraint. Primary Keys cannot be duplicated and therefore copied. In order to omit PERF_RMF_INT_ID column we must specify every other column separately. Step 3: Updating values: UPDATE PERF_RMF_INT SET MVSBSY=CEILING(MVSBSY) , AVGWUCP=CEILING(AVGWUCP) WHERE PERF_RMF_INT_ID >= 12047 AND PERF_RMF_INT_ID <= 12056 ; When updating arrays of values you should be careful not to update additional rows, here is the save way to do that. You can use SELECT statement to display all rows that needs to be modified, then you can refer to them by using Primary Key, this way you're sure you won't update anything else. Of course, also test this selection criteria with SELECT, mistaking '<' for '>' may be quite unfortunate in such case. ________________________________________________________________________________ - UPDATE MVSBSY field in the last row of PERF_RMF_INT table. It should store average value of the entire MVSBSY column. First let's test selection criteria: SELECT * FROM PERF_RMF_INT WHERE PERF_RMF_INT_ID = (SELECT MAX(PERF_RMF_INT_ID) FROM PERF_RMF_INT) ; Now we can update selected row as needed: UPDATE PERF_RMF_INT SET MVSBSY = (SELECT AVG(MVSBSY) FROM PERF_RMF_INT) WHERE PERF_RMF_INT_ID = (SELECT MAX(PERF_RMF_INT_ID) FROM PERF_RMF_INT) ;

Solution 5

- Delete all records from a single day. DELETE FROM PERF_RMF_INT WHERE DATE='2017-12-07' ; Just like in case of update before issuing DELETE statement make sure you issue SELECT statement with the same selection criteria, this way you'll be sure that you'll delete exactly what you want. SELECT * FROM PERF_RMF_INT WHERE DATE='2017-12-07' ; If you want to test final DELTE statement but don't yet make permanent changes you can also use ROLLBACK statement: DELETE FROM PERF_RMF_INT WHERE DATE='2017-12-07' ; ROLLBACK ; ________________________________________________________________________________ - Delete all records where PERF_DAY_AVG_ID is NULL. DELETE FROM PERF_RMF_INT WHERE PERF_DAY_AVG_ID IS NULL; ________________________________________________________________________________ - Delete all records from PERF_RMF_INT table with DATE that's not present in any record of PERF_DAY_AVG table. DELETE FROM PERF_RMF_INT WHERE DATE NOT IN(SELECT DATE FROM PERF_DAY_AVG) ;

Solution 6

- Display DATE, TIME and DAYS column where DAYS stores number of days between values in DATE column and the current date. SELECT DATE, TIME, (DAYS(CURRENT DATE) - DAYS(DATE)) AS DAYS FROM PERF_RMF_INT ; ________________________________________________________________________________ - Display total number of records stored in PERF_RMF_INT table. SELECT COUNT(*) AS NBR_OF_ROWS FROM PERF_RMF_INT ; ________________________________________________________________________________ - Display number of records in which MVSBSY value is higher than 80% during the day (8:00 - 20:00). SELECT COUNT(*) AS HIGH_CPU_DAY FROM PERF_RMF_INT WHERE MVSBSY > 80 AND TIME >= '08:00:00' AND TIME < '20:00:00' ; ________________________________________________________________________________ - Display DATE, AVG_MVSBSY and DAY_OF_WEEK columns. AVG_MVSBSY column should display an average of all values in MVSBSY column from a single day. DAY_OF_WEEK column should display day number (1 for Monday, 2 for Tuesday etc.). SELECT DATE,AVG(MVSBSY) AS AVG_MVSBSY, DAYOFWEEK_ISO(DATE) AS DAY_OF_WEEK FROM PERF_RMF_INT GROUP BY DATE ; Notice the difference between DAYOFWEEK_ISO and DAYOFWEEK functions. IN DAYOFWEEK Sunday is the first day of the week. ________________________________________________________________________________ - Display DATE, TIME, MVSBSY & DATE_STR columns. Use YEAR, DAYOFYEAR and TO_CHAR functions to convert the date to 'YYYY.DDD' format in DATE_STR column. SELECT DATE,TIME,MVSBSY, (TO_CHAR(YEAR(DATE)) !! '.' !! TO_CHAR(DAYOFYEAR(DATE))) AS DATE_STR FROM PERF_RMF_INT ; '||' chars or '!!' depending on your terminal character coding is a string concatenation character. U can also use CONCAT keyword instead. ________________________________________________________________________________ - Use DIGITS function against PERF_RMF_INT_ID, MVSBSY & STOTOT columns. SELECT DIGITS(PERF_RMF_INT_ID) AS DIG_ID, DIGITS(MVSBSY) AS DIG_MVSBSY, DIGITS(STOTOT) AS DIG_STOTOT FROM PERF_RMF_INT ; ________________________________________________________________________________ - Use MONTHS_BETWEEN function to calculate how many months passed since April 1921 to December 2013. SELECT MONTHS_BETWEEN('1921-04-01','2013-12-01') AS MONTHS FROM SYSIBM.SYSDUMMY1 ; This is also a good example how you can use SQL function with SYSIBM.SYSDUMMY1 to calculate something not related to any existing table. ________________________________________________________________________________ - Convert all zeroes in MVSBSY column to 'X' characters. SELECT TRANSLATE(TO_CHAR(MVSBSY),'X','0') AS WTF_COL FROM PERF_RMF_INT ; ________________________________________________________________________________ - Convert spaces in COMPANY_NAME column in LPAR table. First, trim both leading and trailing blanks. Then, convert every space to '-' character. At the end, display COMPANY_NAME on 30 chars adding '.' as filler appearing after the string. For example " TEST COMPANY " should be displayed as "TEST-COMPANY..................". SELECT COMPANY_NAME, RPAD(TRANSLATE(TRIM(COMPANY_NAME),'-',' '),30,'.') AS EDITED_NAME FROM LPAR ; ________________________________________________________________________________ - Display MVSBSY column values as follows ">>>>>>>>>3.0<<<<<<<<". Field should have 20 chars and the value should be in the middle of the string. SELECT MVSBSY, OVERLAY('>>>>>>>>>><<<<<<<<<<',TRIM(MVSBSY), CEILING((11-LENGTH(TRIM(MVSBSY))/2)), LENGTH(TRIM(MVSBSY)),OCTETS) AS EDITED_MVSBSY FROM PERF_RMF_INT ; ________________________________________________________________________________ - Display all records in which MVSBSY column that contains '8' number anywhere in its value, for instance '8.0', '38.1' or '12.8'. SELECT DATE,TIME,MVSBSY FROM PERF_RMF_INT WHERE LOCATE('8',MVSBSY) <> 0 ; LOCATE is a universal function which can be used for text search but there is also a DB2 add-on 'text search support' which provides new, more powerful functions such as CONTAINS or SCORE.

Solution 7

- Display DATE, MVSBSY and STOTOT columns. Calculate average values of MVSBSY and STOTOT columns so one record represents a single day. SELECT DATE, AVG(MVSBSY), AVG(STOTOT) FROM PERF_RMF_INT GROUP BY DATE ; ________________________________________________________________________________ - Display DATE and INT_CNT columns where INT_CNT is the number of records for each day. SELECT DATE,COUNT(*) AS INT_CNT FROM PERF_RMF_INT GROUP BY DATE ; ________________________________________________________________________________ - Display DATE, MVSBSY and LACTMSU columns. Calculate average of MVSBSY column and sum of LACTMSU column so one row represents one day. Display only days in which all intervals are present. Depending on RMF setting one interval is saved each 10 or 15 minutes. By checking for interval number we can exclude days with missing RMF data. - 10 min – 144 intervals. - 15 min – 96 intervals. SELECT DATE, AVG(MVSBSY), SUM(LACTMSU) FROM PERF_RMF_INT GROUP BY DATE HAVING COUNT(*) = 96 ; ________________________________________________________________________________ - Display DATE, MVSBSY and LACTMSU columns. Calculate average of MVSBSY column and sum of LACTMSU column so one row represent working hours of a single day (8AM – 4PM). SELECT DATE, AVG(MVSBSY), SUM(LACTMSU) FROM PERF_RMF_INT WHERE TIME>='08.00.00' AND TIME<='16.00.00' GROUP BY DATE ; ________________________________________________________________________________ - Use LACTMSU column and the data about CPC power of your system (LSPR ITR ratios) to calculate MIPS usage for each record in PERF_RMF_INT table. Calculate sum of MSU column and the average of CPU and MIPS columns for each day. Also, add another column INT_CNT which shows how many records are present for each day. SELECT DATE,AVG(MVSBSY) AS CPU_AVG,SUM(LACTMSU) AS MSU_SUM, AVG((LACTMSU*8.279)) AS MIPS_AVG,COUNT(*) AS INT_CNT FROM PERF_RMF_INT GROUP BY DATE ; ________________________________________________________________________________ - Delete records from PERF_DAY_AVG table that don't have all corresponding intervals (records with the same date) in PERF_RMF_INT table (96 or 144 records depending on RMF setting) but only if those records are from the last 7 days. Due to Foreign Key constraint you'll also have to remove all records from PERF_RMF_INT table that reference records from PERF_DAY_AVG you want to remove. First let's test selection criteria: SELECT * FROM PERF_DAY_AVG WHERE JULIAN_DAY(DATE) >= JULIAN_DAY(CURRENT DATE)-7 AND DATE IN (SELECT DATE FROM PERF_RMF_INT GROUP BY DATE HAVING COUNT(*) <> 96); There are few solutions to that problem, here is the first one: CREATE TABLE TEMPTBL AS ( SELECT DATE FROM PERF_RMF_INT ) WITH NO DATA IN DATABASE JANSDB CCSID ASCII ; INSERT INTO TEMPTBL SELECT DATE FROM PERF_RMF_INT GROUP BY DATE HAVING COUNT(*) <> 96 ; DELETE FROM PERF_RMF_INT WHERE JULIAN_DAY(DATE) >= JULIAN_DAY(CURRENT DATE)-7 AND DATE IN (SELECT DATE FROM TEMPTBL GROUP BY DATE HAVING COUNT(*) <> 96); DELETE FROM PERF_DAY_AVG WHERE JULIAN_DAY(DATE) >= JULIAN_DAY(CURRENT DATE)-7 AND DATE IN (SELECT DATE FROM TEMPTBL GROUP BY DATE HAVING COUNT(*) <> 96); DROP TABLE TEMPTBL ; ROLLBACK ; ROLLBACK statement enables you to test DELETE or any other statement without making actual changes to the database. In this solution, we've created a temporary table that stores dates with incomplete interval count. Next, we use it in both DELETE statements. We need temporary table because after removing records from PERF_RMF_INT SELECT sub-query in the second DELETE clause won't extract the data about days with incomplete interval count, after all, we've just removed all those records. The second, simpler solution: DELETE FROM PERF_RMF_INT WHERE JULIAN_DAY(DATE) >= JULIAN_DAY(CURRENT DATE)-7 AND DATE IN (SELECT DATE FROM PERF_RMF_INT GROUP BY DATE HAVING COUNT(*) <> 96); DELETE FROM PERF_DAY_AVG WHERE JULIAN_DAY(DATE) >= JULIAN_DAY(CURRENT DATE)-7 AND DATE NOT IN (SELECT DATE FROM PERF_RMF_INT GROUP BY DATE HAVING COUNT(*) = 96); In here, the second DELETE statement checks if there are any records in PERF_DAY_AVG with DATE with which there are no 96 records in PERF_DAY_AVG table. In result, we'll also remove all records from PERF_DAY_AVG with no corresponding PERF_RMF_INT records. In the first solution PERF_DAY_AVG must have had at least one corresponding record in PERF_RMF_INT table if it is to be removed. In following test there is one record in PERF_DAY_AVG table with the DATA which is not present in any of the records in PERF_RMF_INT table: The first solution: NUMBER OF ROWS AFFECTED IS 3 – record not removed. The second solution: NUMBER OF ROWS AFFECTED IS 4 – record removed. In this assignment this difference doesn't matter but there are cases in which it matters a lot. This example nicely shows how small difference in SQL logic makes a big difference so you should be always careful when updating database in any way.

Solution 8

- Display DATE, TIME, MVSBSY and LPAR_NAME columns. Use WITH clause to join LPAR_NAME column from LPAR table with the main query. WITH TLPAR AS ( SELECT * FROM LPAR ) SELECT P.DATE,P.TIME,P.MVSBSY,L.LPAR_NAME FROM PERF_RMF_INT P,TLPAR L WHERE P.LPAR_ID = L.LPAR_ID ; Notice that if you'll remove WHERE clause each record is duplicated many times. If PERF_RMF_INT table has 1000 records and LPAR table 10 records you should get 1000 records but without WHERE clause you get 1000*10 records. Of course in this case we didn't need WITH clause. Following statement works the same way but we can see here what WITH clause is all about – it enables us to create one or more temporary tables we can use later in the main query. An easier way: SELECT P.DATE,P.TIME,P.MVSBSY,L.LPAR_NAME FROM PERF_RMF_INT P,LPAR L WHERE P.LPAR_ID = L.LPAR_ID ; ________________________________________________________________________________ - Display count of records in which MVSBSY value is higher than 80% during the day (8:00 - 20:00) in comparison to count of records in which MVSBSY value is higher than 80% during the night (20:00 - 8:00). Use WITH clause to display both values in a single row. WITH TDAY AS ( SELECT COUNT(*) AS HIGH_CPU_DAY FROM PERF_RMF_INT WHERE MVSBSY > 0 AND TIME >= '08:00:00' AND TIME < '20:00:00' ) , TNIGHT AS ( SELECT COUNT(*) AS HIGH_CPU_NIGHT FROM PERF_RMF_INT WHERE MVSBSY > 0 AND (TIME >= '20:00:00' OR TIME < '08:00:00') ) SELECT HIGH_CPU_DAY,HIGH_CPU_NIGHT FROM TDAY,TNIGHT; ________________________________________________________________________________ - Display DATE, DAY_NBR, DAY_NAME & AVG_MVSBSY columns. AVG_MVSBSY column is an average MVSBSY value from a specific day. DAY_NBR column should store the day number ('1' for Monday, '2' for Tuesday etc). DAY_NAME should store the day name ('Monday' for 1, 'Tuesday' for 2 etc). Use temporary table with two columns DAY_ID and DAY_NAME which assigns a day name to every day number. WITH TMP (DAY_ID, DAY_NAME) AS( SELECT 1,'MONDAY' FROM SYSIBM.SYSDUMMY1 UNION ALL SELECT 2,'TUESDAY' FROM SYSIBM.SYSDUMMY1 UNION ALL SELECT 3,'WEDNESDAY' FROM SYSIBM.SYSDUMMY1 UNION ALL SELECT 4,'THURSDAY' FROM SYSIBM.SYSDUMMY1 UNION ALL SELECT 5,'FRIDAY' FROM SYSIBM.SYSDUMMY1 UNION ALL SELECT 6,'SATURDAY' FROM SYSIBM.SYSDUMMY1 UNION ALL SELECT 7,'SUNDAY' FROM SYSIBM.SYSDUMMY1 ) SELECT P.DATE,DAYOFWEEK_ISO(P.DATE) AS DAY_OF_WEEK, T.DAY_NAME,AVG(P.MVSBSY) FROM PERF_RMF_INT P,TMP T WHERE DAYOFWEEK_ISO(P.DATE) = T.DAY_ID GROUP BY P.DATE,T.DAY_NAME ; With use of SYSIBM.SYSDUMMY1 and UNION ALL you can create a new table that will exist only during the execution of the query. This is a useful trick whenever you need to add a new column to the query in which values depend on another column like in this example.

Solution 9